Coral Edge TPU

Introduction

Coral helps you bring on-device AI application ideas from prototype to production. They offer a platform of hardware components, software tools, and pre-compiled models for building devices with local AI. (Source: coral.ai)

The Coral Mini PCIe Accelerator (https://coral.ai/products/pcie-accelerator) can be attached to our QSBASE3 baseboard and used with our QSXM and QSXP modules.

There are two ways to get the karo-image-ml image needed to use it:

- Download the karo-image-ml image for your module from our Download Area.
- Build karo-image-ml yourself by following the NXP Yocto BSP Guide.

Setup

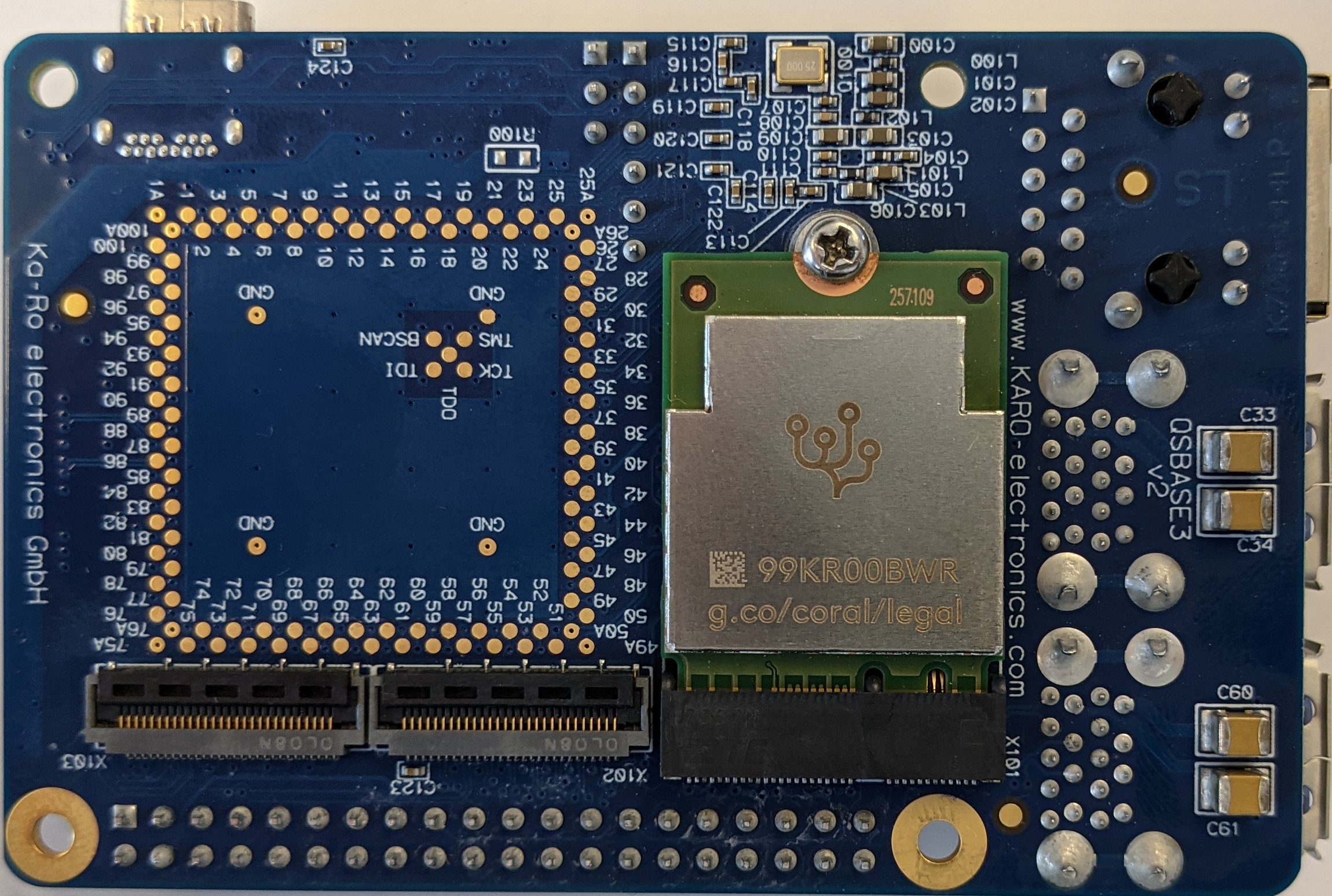

Mount the Coral PCIe module to our QSBASE3 baseboard:

Getting Started [1]

karo-image-ml

For the following steps, you need the karo-image-ml rootfs on your board.

You can build it by following the NXP Yocto BSP Guide or download it from our Download Area.

Note

PCIe has to be enabled in the device tree:

QSXM:

```dts
&pcie0 {
	status = "okay";
};
```

QSXP:

```dts
&pcie {
	status = "okay";
};

&pcie_phy {
	status = "okay";
};
```
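After booting with PCIe enabled, you can check whether the accelerator was enumerated on the PCIe bus. The following Python sketch scans sysfs for a device with the PCI IDs `1ac1:089a`, which we assume is the ID pair the Coral Edge TPU reports (confirm with `lspci -nn` on your board), and checks for the `/dev/apex_0` node that the apex driver is assumed to create. `find_edgetpu_pci_devices` and `apex_node_present` are hypothetical helper names, not part of any Coral tool.

```python
import os
from pathlib import Path

# Assumed PCI vendor/device IDs of the Coral Edge TPU (confirm with `lspci -nn`).
CORAL_VENDOR_ID = "0x1ac1"
CORAL_DEVICE_ID = "0x089a"


def find_edgetpu_pci_devices(sysfs_root="/sys/bus/pci/devices"):
    """Return the PCI addresses (e.g. '0000:01:00.0') of all Edge TPUs found."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return []
    matches = []
    for dev in sorted(root.iterdir()):
        try:
            vendor = (dev / "vendor").read_text().strip().lower()
            device = (dev / "device").read_text().strip().lower()
        except OSError:
            continue  # entry without readable ID files: skip it
        if vendor == CORAL_VENDOR_ID and device == CORAL_DEVICE_ID:
            matches.append(dev.name)
    return matches


def apex_node_present(path="/dev/apex_0"):
    """True if the device node of the apex driver exists (node name is an assumption)."""
    return os.path.exists(path)


if __name__ == "__main__":
    print("Edge TPU on PCIe bus:", find_edgetpu_pci_devices() or "not found")
    print("apex device node present:", apex_node_present())
```

If the list is empty, check the device tree changes and that the module sits firmly in the Mini PCIe slot.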

PyCoral Library

PyCoral is a Python library built on top of the TensorFlow Lite library to speed up your development and provide extra functionality for the Edge TPU.

It is recommended to start with the PyCoral API because it reduces the amount of code you must write to run an inference. However, you can also build your own projects using TensorFlow Lite directly, in either Python or C++.
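To illustrate what PyCoral takes off your hands, here is a minimal sketch of running a quantized classification model with TensorFlow Lite directly in Python. It assumes the `tflite_runtime` package and the Edge TPU runtime library `libedgetpu.so.1` are installed on the rootfs, and that the model takes uint8 input that needs no extra normalization. `dequantize`, `top_k` and `classify_with_tflite` are hypothetical helper names; the dequantization and ranking steps are the kind of work PyCoral's adapters otherwise do for you.

```python
def dequantize(values, scale, zero_point):
    """Convert quantized integer outputs to real-valued scores."""
    return [scale * (int(v) - zero_point) for v in values]


def top_k(scores, k=1):
    """Return (class_id, score) pairs for the k highest scores, best first."""
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


def classify_with_tflite(model_path, image_path, k=1):
    # Imported lazily so the pure helpers above work without the Edge TPU stack.
    import numpy as np
    import tflite_runtime.interpreter as tflite
    from PIL import Image

    # The Edge TPU delegate lets TFLite hand the compiled ops to the TPU.
    interpreter = tflite.Interpreter(
        model_path=model_path,
        experimental_delegates=[tflite.load_delegate("libedgetpu.so.1")],
    )
    interpreter.allocate_tensors()

    inp = interpreter.get_input_details()[0]
    _, height, width, _ = inp["shape"]
    image = Image.open(image_path).convert("RGB").resize((int(width), int(height)))
    # Assumption: uint8 input that can be fed without normalization.
    batch = np.expand_dims(np.asarray(image, dtype=np.uint8), 0)
    interpreter.set_tensor(inp["index"], batch)
    interpreter.invoke()

    out = interpreter.get_output_details()[0]
    scale, zero_point = out["quantization"]
    raw = interpreter.get_tensor(out["index"])[0]
    return top_k(dequantize(raw, scale, zero_point), k)
```

The pure helpers are kept separate from the hardware call so they can be checked on a development machine without an Edge TPU.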

To install the PyCoral library in the karo-image-ml rootfs, use the following command:

```sh
pip3 install --extra-index-url https://google-coral.github.io/py-repo/ pycoral
```
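To verify the installation, a short check like the following can be run on the board. It is a sketch under the assumption that `pycoral.utils.edgetpu.list_edge_tpus()` is available in your PyCoral version; `pycoral_installed` and `list_tpus` are hypothetical helper names. With the accelerator working, the list should contain an entry for the PCIe device.

```python
import importlib.util


def pycoral_installed():
    """True if the pycoral package can be found by the current Python interpreter."""
    return importlib.util.find_spec("pycoral") is not None


def list_tpus():
    """Return the Edge TPUs PyCoral can see, or an empty list if PyCoral is missing."""
    if not pycoral_installed():
        return []
    from pycoral.utils.edgetpu import list_edge_tpus
    return list_edge_tpus()


if __name__ == "__main__":
    print("pycoral installed:", pycoral_installed())
    print("Edge TPUs:", list_tpus())
```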

Tip

If you receive SSL/certificate errors, make sure the system time on your module is set correctly:

```sh
ntpdate -s -u <ntp-server>
```
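To see how far off the module's clock is before correcting it, the following sketch sends a minimal SNTP client request to an NTP server and compares the server's transmit timestamp with the local clock. It ignores network round-trip delay, so treat the result only as a rough estimate. `parse_transmit_time` and `clock_offset` are hypothetical helper names, and the server name you pass in stands for the same `<ntp-server>` placeholder as above.

```python
import socket
import struct
import time

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01).
NTP_TO_UNIX_OFFSET = 2208988800


def parse_transmit_time(packet):
    """Extract the server transmit timestamp (Unix seconds) from an NTP reply."""
    if len(packet) < 48:
        raise ValueError("NTP packet too short")
    # Bytes 40..47: transmit timestamp as 32-bit seconds + 32-bit fraction.
    seconds, fraction = struct.unpack("!II", packet[40:48])
    return seconds - NTP_TO_UNIX_OFFSET + fraction / 2**32


def clock_offset(server, timeout=5.0):
    """Return server time minus local time in seconds (positive: local clock is behind)."""
    request = b"\x1b" + 47 * b"\0"  # LI=0, version=3, mode=3 (client)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(request, (server, 123))
        reply, _ = sock.recvfrom(48)
    return parse_transmit_time(reply) - time.time()
```

An offset of many days or years usually explains certificate validation failures during `pip3 install`.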

Run a Model on the Edge TPU

Follow these steps to perform image classification with the example code and a MobileNet v2 model:

Download the example code from GitHub:

```sh
mkdir coral && cd coral
git clone https://github.com/google-coral/pycoral.git
cd pycoral
```

Download the model, labels, and bird photo:

```sh
bash examples/install_requirements.sh classify_image.py
```

Run the image classifier with the example bird photo:

```sh
python3 examples/classify_image.py \
  --model test_data/mobilenet_v2_1.0_224_inat_bird_quant_edgetpu.tflite \
  --labels test_data/inat_bird_labels.txt \
  --input test_data/parrot.jpg
```
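The core of `examples/classify_image.py` comes down to a few PyCoral calls. The following is a simplified sketch, not the example script itself: it omits argument parsing and the timing loop, and it assumes the model input needs no extra normalization (the example script checks this before setting the input). `classify_image` is a hypothetical helper name.

```python
def classify_image(model_path, labels_path, image_path, top_k=1):
    """Run one classification on the Edge TPU and return (label, score) pairs."""
    # Imported inside the function so this file can be loaded without PyCoral.
    from PIL import Image
    from pycoral.adapters import classify, common
    from pycoral.utils.dataset import read_label_file
    from pycoral.utils.edgetpu import make_interpreter

    labels = read_label_file(labels_path)       # {class_id: label}
    interpreter = make_interpreter(model_path)  # interpreter with the Edge TPU delegate
    interpreter.allocate_tensors()

    # Resize the photo to the model's input size and copy it into the input tensor.
    size = common.input_size(interpreter)
    image = Image.open(image_path).convert("RGB").resize(size, Image.LANCZOS)
    common.set_input(interpreter, image)

    interpreter.invoke()
    classes = classify.get_classes(interpreter, top_k=top_k)
    return [(labels.get(c.id, c.id), c.score) for c in classes]


# Example usage on the board, from inside the pycoral checkout:
#   for label, score in classify_image(
#           "test_data/mobilenet_v2_1.0_224_inat_bird_quant_edgetpu.tflite",
#           "test_data/inat_bird_labels.txt",
#           "test_data/parrot.jpg"):
#       print(f"{label}: {score:.5f}")
```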

You should see a result like this:

```text
W :131] Could not set performance expectation : 4 (Inappropriate ioctl for device)
----INFERENCE TIME----
Note: The first inference on Edge TPU is slow because it includes loading the model into Edge TPU memory.
25.9ms
7.9ms
7.9ms
7.9ms
7.9ms
-------RESULTS--------
Ara macao (Scarlet Macaw): 0.75781
```
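The inference times above show the warm-up effect described in the output: the first run also loads the model into Edge TPU memory. When benchmarking your own models, it can help to report the first run separately from the steady-state runs. The sketch below times any zero-argument callable (for example `interpreter.invoke`) with `time.perf_counter()`; `time_runs` and `summarize` are hypothetical helper names.

```python
import statistics
import time


def time_runs(invoke, count=5):
    """Call invoke() count times and return each duration in milliseconds."""
    durations = []
    for _ in range(count):
        start = time.perf_counter()
        invoke()
        durations.append((time.perf_counter() - start) * 1000.0)
    return durations


def summarize(durations_ms):
    """Split timings into the first (warm-up) run and the mean of the remaining runs."""
    if len(durations_ms) < 2:
        raise ValueError("need at least two runs to separate warm-up from steady state")
    first, rest = durations_ms[0], durations_ms[1:]
    return first, statistics.mean(rest)


# Example with the numbers printed by classify_image.py above:
first, steady = summarize([25.9, 7.9, 7.9, 7.9, 7.9])
print(f"first run: {first:.1f} ms, steady state: {steady:.1f} ms")
# prints "first run: 25.9 ms, steady state: 7.9 ms"
```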

Hint

The “Could not set performance expectation” warning comes from the apex kernel module in the linux-imx kernel. For details, see https://github.com/google-coral/libedgetpu/issues/11

Next Steps

You can continue with the official Coral documentation: https://coral.ai/docs/m2/get-started#next-steps

References